The spread of automation technologies in domestic and work environments, and the evolution of the ways we access digital information and services, allow the collection of data concerning the behaviors of individuals and communities. Developments in AI and in technologies such as speech recognition, natural language processing, and gestural interaction reduce the cognitive effort of interacting with digital products, thus encouraging their ubiquitous use and increasing the continuous exchange of personal data over the Internet. In the physical/digital world that we are building, we constantly produce personal data that are collected and processed on the Internet for several purposes. We produce data when we carry a cell phone, drive a car, use most payment systems, exchange digital messages, switch on appliances, google for information, visit a healthcare center for treatment, or perform many other activities. The correct management of personal data is a delicate and critical task that requires dedicated thinking and the definition of appropriate design principles addressing personal needs in terms of privacy, safety, security and freedom. In this context, it is important to discuss the role of storytelling and of literary science fiction in design-oriented discussions of technology-based scenarios, and in anticipating the consequences of design choices when creating innovative solutions. In the design of technology-based solutions, creating scenarios and representing them through video storytelling is a consolidated practice. My two cents here is the description of some Ethic-oriented Reference Scenarios through storytelling, as an effective way to explain innovative solutions and to illustrate their advantages and critical issues by projecting them into a future in which these solutions act and have agency.

Ethic-oriented Reference Scenarios

Using science fiction visions of the future to create Ethic-oriented Reference Scenarios as a basis for designing interactive solutions that involve personal data and information.

Year

2017

Reference Lab

IEX Design Lab

Perfect Humanity

Gattaca (1997) – Eugenics is the norm in a world where people are discriminated against according to their genetic ‘validity’.

Technology can be seen as a means to create a ‘Perfect Humanity’ through the capture and analysis of information related either to the biological characteristics of individuals (such as genetic data and biodata) or to their individual behaviors, so as to identify patterns or anomalies. This aim requires cross-referencing data, comparing the data of single persons with those of other people, in order to discover problems and take action to correct anomalies. While the utopian purpose is the improvement of life, the dystopian point of view reveals risks of discrimination: the same phenomena we see in communities that discriminate against people with visible anomalies or with behaviors that deviate from the norm. The unceasing pursuit of a perfect humanity leads to exclusion, lack of freedom, and the inability of people to keep intimate information hidden in the contexts in which they live. An example of this situation can be found in currently available applications for tracking eating habits. These services rely on self-tracking information uploaded by users as food logs, and on fitness devices, such as wristbands, that track biodata in real time (such as heart rate, perspiration, GPS location, and so on). These services collect our personal bio-information, compare it with the average data of all users, and propose ‘right goals’ to pursue. Despite the potential of these solutions to encourage healthier lifestyles, some questions arise as they reach the market. Who can see and use the information? How long will these data be stored? What are the rules on privacy and on the ownership of data? How can we guarantee the suitability of the data taken as a reference? How can we guarantee that personal information will not be used against the general interests of the user?

Pervasive Awareness

Minority Report (2002) – Billboards in the mall recognize you and call you by your name, tailoring the advertising just for you.

Technologies can track our activities, helping us identify our good or bad behaviors and performances. We can track our activities ourselves or let biosensors and IoT devices do this work on our behalf. Automated self-tracking can improve our efficiency and lead to behavior change, helping us make the right choices, but it can also harm the quality of our lives by distorting our self-perception and the way other people perceive us. The creation of ‘over-awareness’ can lead to even higher expectations of ourselves or point out defects, making us worry about new (and maybe useless) problems. As an example, consider applications for monitoring productivity: some detect the number of hours users spend working on the computer and place them in a productivity range. But is the ‘working hours’ ratio useful to the user, or can it lead to a distorted perception of individual productivity?

Mnemonic

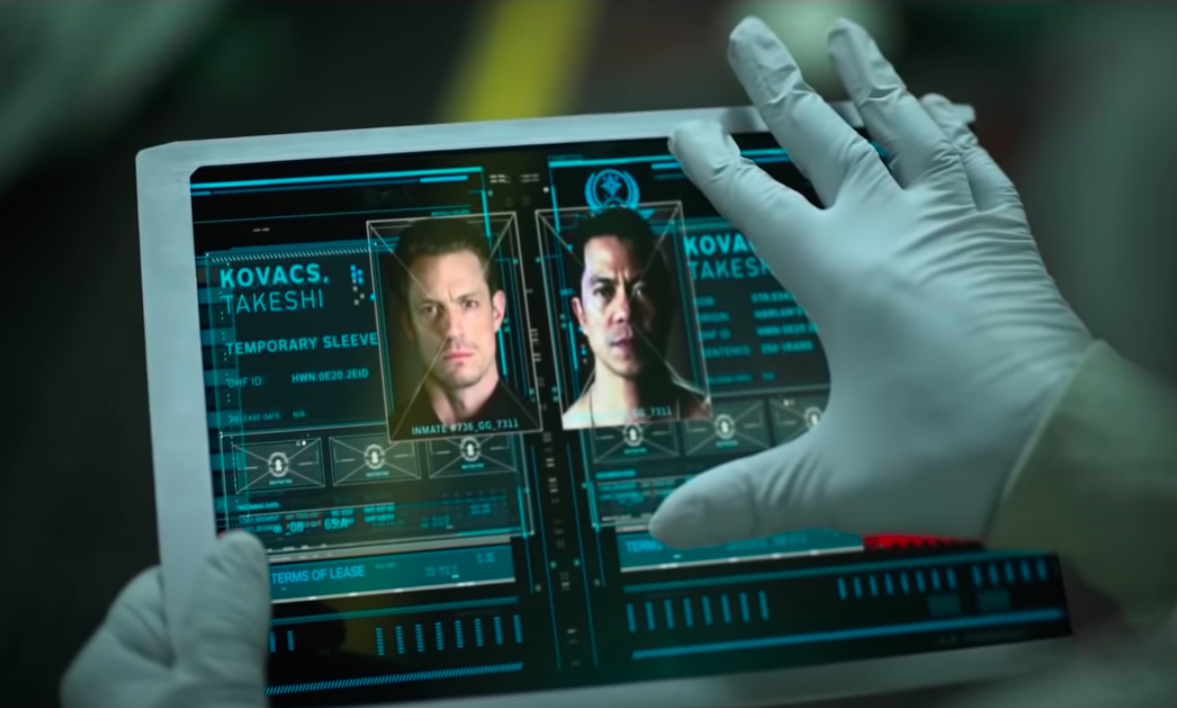

Altered Carbon (2018) – The consciousness of any individual can be recorded on a device and transferred to a new body.

Human brains don’t have enough room to store all the facts we perceive; they select and store only the most useful information. The idea of ‘Mnemonic’ is the dream of accessing information anytime and anywhere, and of remembering and keeping it thanks to the capacity of digital data storage. But collecting and storing information on the Internet, in objects and in the environment removes the solace of oblivion, because memory becomes ubiquitously accessible. Should we always consider it progress that humans will no longer be able to forget? Content uploaded to the Internet stays on the Internet, and we build permanent records of what we post online. We can see the risks of this approach when a person’s sex tape is uploaded online. The video will stay there no matter what the person involved tries to do; it will remain online forever, and this can ruin lives. We can easily find examples of people (especially women) who committed suicide after being cyberbullied.

Super Monitor

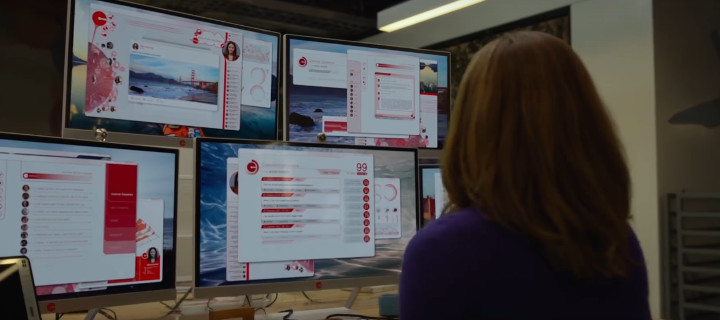

The Circle (2017) – Social media have the power to surveil and monitor people’s activities constantly.

Ubiquitous and pervasive computing, geo-located information, and connected cameras allow systems to carry out mass surveillance and ‘sousveillance’ of people and their activities, in order to increase security and safety, but also to predict events. Systems can monitor our position and video-record our movements; they can also interpret our actions, trying to detect bad intentions. These data can be used for tailored services and marketing, but from the dystopian point of view, the massive control enabled by technologies reinforces hierarchies, erodes privacy, and widens inequalities of power and wealth, because knowledge becomes concentrated in specific individuals, organizations or companies. Should a home appliance understand what we are doing in our private moments to keep us safe? Consider a teenager who usually goes to Target for her daily shopping: one day her family receives mail with discounts and offers for expectant mothers. This happens because the company’s marketing approach involves inferring customers’ private habits, and the recent changes in her shopping habits suggest that she is pregnant.

Automation Box

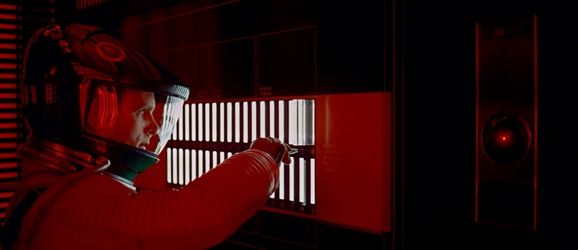

2001: A Space Odyssey (1968) – The supercomputer HAL acts according to machine decisions that are obscure to humans.

As the opposite of ‘Pervasive Awareness’, the ‘Automation Box’ represents the situation in which automatic systems and AI relieve humans from worrying about tasks, performances and actions, which are instead performed automatically by machines. The complete automation of activities leads not only to a lack of ‘human touch’ in performances, but also to machine decisions that can remain obscure to human minds. In extreme automation, users depend on the software and must adapt to it. As an instance, consider automatic driving systems: who is responsible for mistakes made by a self-driving car? One of the most relevant issues at the moment is the mechanism behind the choices made by AI, machine learning and neural networks. Even the programmers who built the algorithms often cannot fully control them or explain how they make their choices. Artificial intelligences can make their own choices in pursuit of the goals they were built for, but what if, to achieve these goals, they have to make choices that run against us? It is easy to imagine that AI will sooner or later slip out of human control because we will not understand its ‘thinking’.

Human Behavior Computer

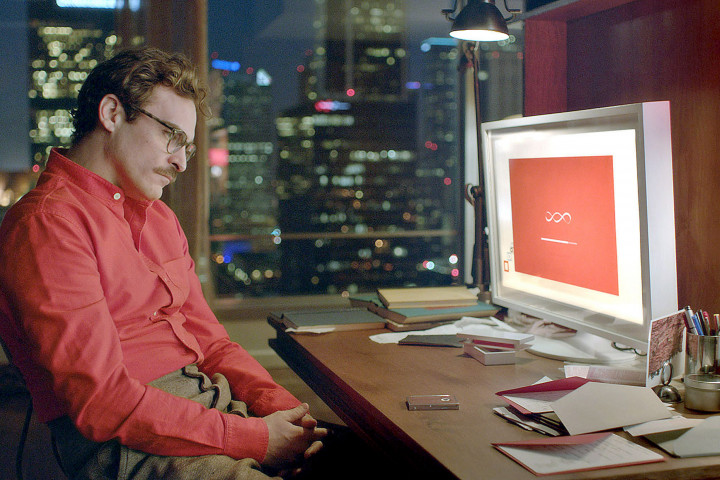

Her (2013) – The difference between computers and humans blurs due to the human-like behavior of the hyper-tailored operating system.

AI, machine learning, genetics, ubiquitous and pervasive computing, robotics, and micro/nano robotics aim to optimize tasks and automate actions by learning from humans how to face problems, and to perform actions that lighten humans’ loads. ‘Bad Humanity Behavior Computer’ is the dystopia in which machine behaviors reflect the worst aspects of human nature. People begin to blame technology for changes in their lifestyle, but also believe that technology is omnipotent. This points to a technological-determinist perspective that reifies technology. Machine learning is based on the analysis of human behaviors and ways of thinking. In literature, technologies learn from humans’ bad behaviors, amplifying their bad influence; they can also try to destroy us, interpreting our actions as a threat to our own survival or to the survival of others. In the real world, we can find examples of modern bad practices in cases where blackmailers extort goods from retailers by threatening to publish bad reviews of their businesses. What if AI machines learn from bad human behaviors?

Stargate

Ender’s Game (2013) – The boundary between the real and the fictional world blurs, and young gamers’ actions in virtual reality affect the real world.

Pervasive connectivity erases distances and the need for physical presence. We can carry out activities remotely thanks to the ubiquitous presence of connected devices. We no longer need to be ‘there’ and ‘then’. But this contraction of space and time can harm our interpersonal communication, relationships, and communities in two ways: degradation of communication within social groups, due to the increased time spent using technologies; and misinterpretation of real contexts and spaces, due to heightened immersion in virtual spaces. Humans can experience a lack of participation in ‘real life’ actions, as well as a disconnection from reality that leads to a perceived merging of the real and the unreal. Technologies change the way we find friends and love, and the way we build networks. People decide to meet after chatting on dating apps; this is an opportunity to create a bridge between people, a portal through space. Similarly, we can control home appliances remotely using our phones, and check on our pets through a camera.

Avatar

The Matrix (1999) – In a world dominated by machines, the only chance of mental survival for humans lies in a virtual Earth-like environment where people’s digital identities can live a normal life.

The creation of alternative worlds using virtual, augmented and mixed realities allows humans not only to be in a different place but also to be a different person. With an alternative identity, people can be free to be somebody different from who they are in the real world. They can enhance their experience and elicit emotions in these worlds through sensors (e.g., facial expression detection, and voice recording and recognition), escaping reality. Technologies can also enhance experience in the real world, using robotics and nanotechnologies to compensate for human disabilities. The ‘Avatar’ therefore leads to a multiplication of, and confusion between, identities: people can create different digital identities and split themselves among them. People can date after chatting on dating apps, and their avatars can even date in virtual environments such as Second Life or Ultima Online; we can talk with chatbots that are hard to distinguish from real people. Recently, an AI system was used to create a realistic-looking fake video of a speech by former U.S. President Barack Obama. Will we humans be able to distinguish real content from fakes?

Further readings

- Dourish, P., & Bell, G. (2014). “Resistance is futile”: Reading science fiction alongside ubiquitous computing. Personal and Ubiquitous Computing, 18(4), 769–778. https://doi.org/10.1007/s00779-013-0678-7

- Dunne, A., & Raby, F. (2013). Speculative everything: Design, fiction, and social dreaming. The MIT Press.

- Johnson, B. D. (2011). Science Fiction Prototyping: Designing the future with Science Fiction. Morgan & Claypool.

- Lanier, J. (2013). Who owns the future? Simon & Schuster.

- Linehan, C., Kirman, B. J., Reeves, S., Blythe, M. A., Tanenbaum, J. G., Desjardins, A., & Wakkary, R. (2014). Alternate endings: Using fiction to explore design futures. Proceedings of the Extended Abstracts of the 32nd Annual ACM Conference on Human Factors in Computing Systems – CHI EA ’14, 45–48. https://doi.org/10.1145/2559206.2560472

- Shapiro, A. N. (2016). The Paradox of Foreseeing the Future. Alan Shapiro. http://www.alan-shapiro.com/the-paradox-of-foreseeing-the-future-by-alan-n-shapiro/

- Varisco, L., Pillan, M., & Bertolo, M. (2017). Personal digital trails: Toward a convenient design of products and services employing digital data. 4D Design Development Developing Design Conference Proceedings, 180–188. https://doi.org/10.5755/e01.9786090214114